Learning robust controllers that work across many partially observable environments - Robohub

Source: robohub

Published: 11/27/2025

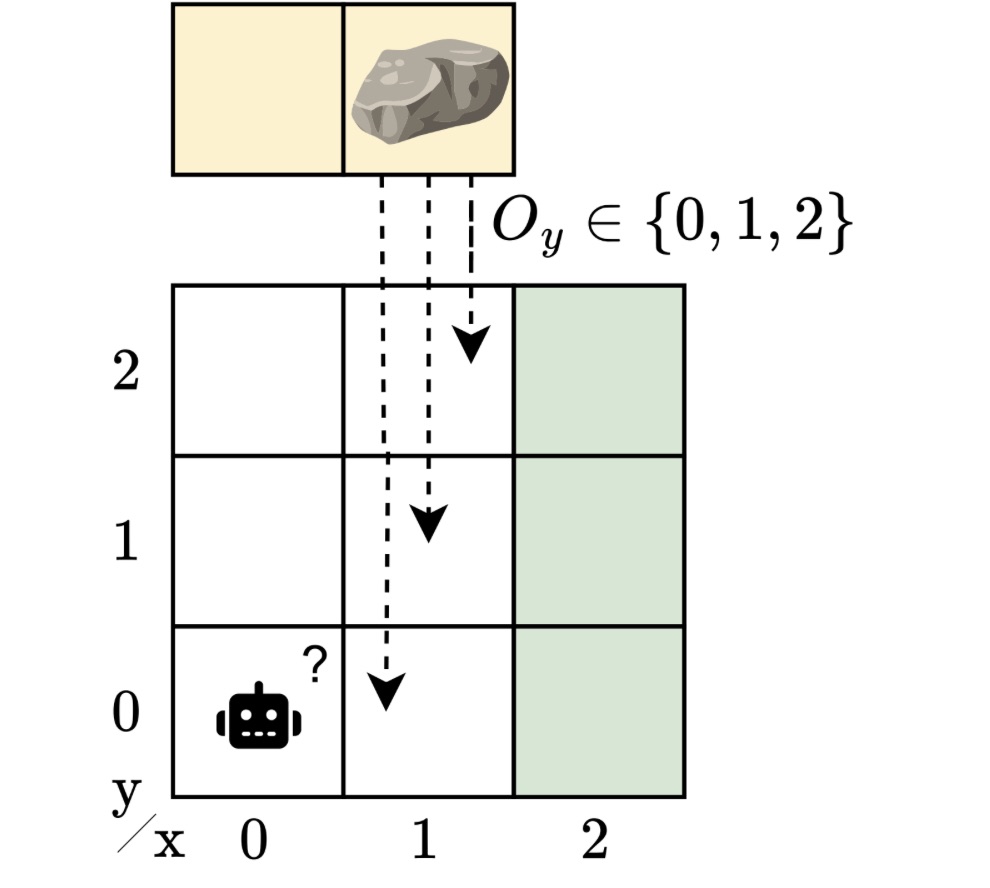

The article discusses the challenge of designing robust controllers for intelligent systems operating in partially observable and uncertain environments, such as autonomous robots navigating with incomplete information. Traditional models like partially observable Markov decision processes (POMDPs) handle uncertainty in observations and actions but assume a fixed environment model, limiting their ability to generalize across variations in system dynamics or sensor noise. To address this, the authors introduce the hidden-model POMDP (HM-POMDP) framework, which represents a set of possible environments sharing structure but differing in dynamics or rewards. Controllers designed under HM-POMDPs must perform reliably across all these variations, ensuring robustness by optimizing for worst-case performance.
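The worst-case objective described above can be written compactly. The notation here is an assumption made for illustration and is not taken from the article: $\mathcal{M}$ is the set of candidate POMDPs, $\Pi$ is the class of controllers under consideration, and $V^{\pi}_{M}$ is the expected return of controller $\pi$ in model $M$ (for a cost-based objective, the max and min would swap).

$$
\pi^{*} \in \arg\max_{\pi \in \Pi} \; \min_{M \in \mathcal{M}} V^{\pi}_{M}
$$

A controller is therefore judged by the model in which it does worst, not by its average over the set.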

The key innovation is the development of robust finite-memory controllers that incorporate internal memory states to handle partial observability and model uncertainty simultaneously. These finite-state controllers are practical and efficient policy representations that update their internal states based on observations and actions, enabling better decision-making despite incomplete information. The article highlights the authors’ IJCAI 202
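To make the finite-state controller idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' method: the `POMDP` and `FSC` classes, the toy two-state model built by `make_model`, and the finite-horizon evaluation are all hypothetical. The controller picks an action from its current memory node and moves to a new node based on the observation it receives; its robust value is the minimum of its expected return over a small set of models that share structure but differ in one transition probability.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class POMDP:
    T: List[List[List[float]]]  # T[s][a][s2]: transition probability
    Z: List[List[float]]        # Z[s2][o]: probability of observation o in state s2
    R: List[List[float]]        # R[s][a]: immediate reward
    b0: List[float]             # initial state distribution


@dataclass
class FSC:
    action: List[int]           # action[n]: action taken in memory node n
    update: List[List[int]]     # update[n][o]: next memory node after observing o
    start: int = 0              # initial memory node


def fsc_value(m: POMDP, c: FSC, horizon: int) -> float:
    """Expected total reward of controller c in model m over a finite horizon."""
    n_states, n_nodes, n_obs = len(m.T), len(c.action), len(m.Z[0])
    V = [[0.0] * n_nodes for _ in range(n_states)]
    for _ in range(horizon):
        new_V = [[0.0] * n_nodes for _ in range(n_states)]
        for s in range(n_states):
            for n in range(n_nodes):
                a = c.action[n]
                total = m.R[s][a]
                for s2 in range(n_states):
                    for o in range(n_obs):
                        total += m.T[s][a][s2] * m.Z[s2][o] * V[s2][c.update[n][o]]
                new_V[s][n] = total
        V = new_V
    return sum(m.b0[s] * V[s][c.start] for s in range(n_states))


def worst_case_value(models: List[POMDP], c: FSC, horizon: int) -> float:
    """Robust value: the controller's return in its least favourable model."""
    return min(fsc_value(m, c, horizon) for m in models)


def make_model(p: float) -> POMDP:
    """Hypothetical toy model: action 0 reaches the goal (state 1) with probability p."""
    return POMDP(
        T=[[[1 - p, p], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]]],
        Z=[[0.8, 0.2], [0.2, 0.8]],   # noisy sensor: correct 80% of the time
        R=[[0.0, 0.0], [1.0, 1.0]],   # reward 1 per step spent in the goal state
        b0=[1.0, 0.0],
    )


models = [make_model(0.5), make_model(0.9)]
# Node 0 moves, node 1 stays; the observation alone selects the next node.
controller = FSC(action=[0, 1], update=[[0, 1], [0, 1]])
print(worst_case_value(models, controller, horizon=3))
```

The sketch only evaluates a given controller. Searching for a controller that maximizes `worst_case_value` is the harder problem the article is about, and is not attempted here.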

Tags

robotics, autonomous-systems, control-systems, partially-observable-environments, POMDP, robust-controllers, machine-learning