Microsoft debuts Maia 200 AI chip promising 3x inference performance

Source: interestingengineering

Author: @IntEngineering

Published: 1/26/2026

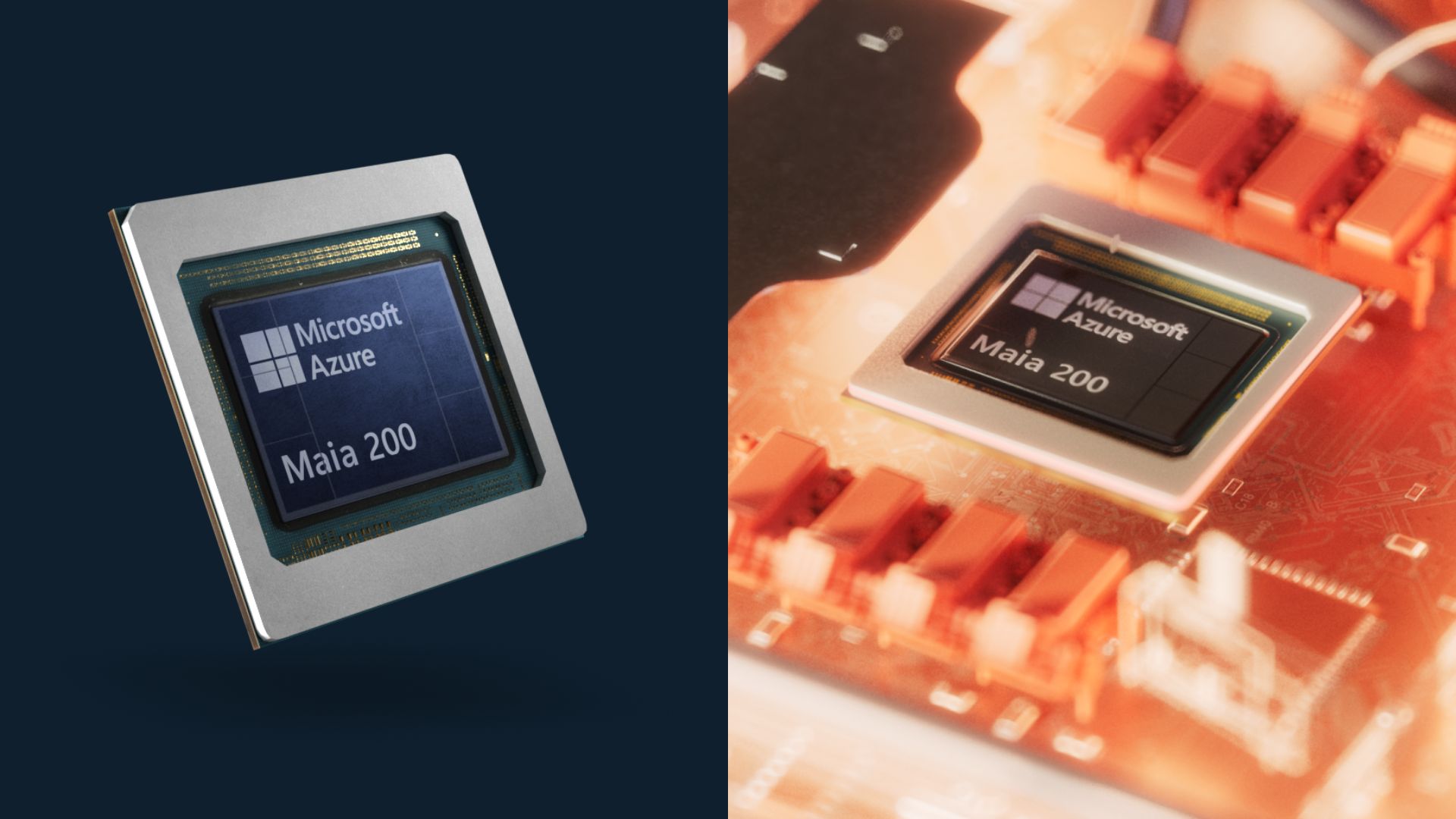

Microsoft has unveiled the Maia 200, its second-generation AI chip, designed for inference workloads (the continuous process of serving AI responses) and aimed at the rising cost of running large AI models at scale. Building on the Maia 100 introduced in 2023, the Maia 200 packs over 100 billion transistors and delivers more than 10 petaflops of compute at 4-bit precision (around 5 petaflops at 8-bit). The chip emphasizes speed, stability, and power efficiency, and incorporates a large amount of fast SRAM to reduce latency during repeated queries, which is critical for handling spikes in user traffic in AI services like chatbots and copilots. Microsoft has deployed the Maia 200 in data centers in Iowa, with plans for further deployment in Arizona.

Strategically, Maia 200 represents Microsoft's effort to reduce dependence on NVIDIA, the dominant player in AI hardware, by offering competitive performance and an alternative ecosystem. Microsoft claims the Maia

Tags

AI-chip, semiconductor, Microsoft-Maia-200, AI-inference, high-performance-computing, cloud-infrastructure, TSMC-3nm-technology